Enhancing LLMs with Vectorization in Retrieval-Augmented Generation (RAG) for Structured Data

Introduction

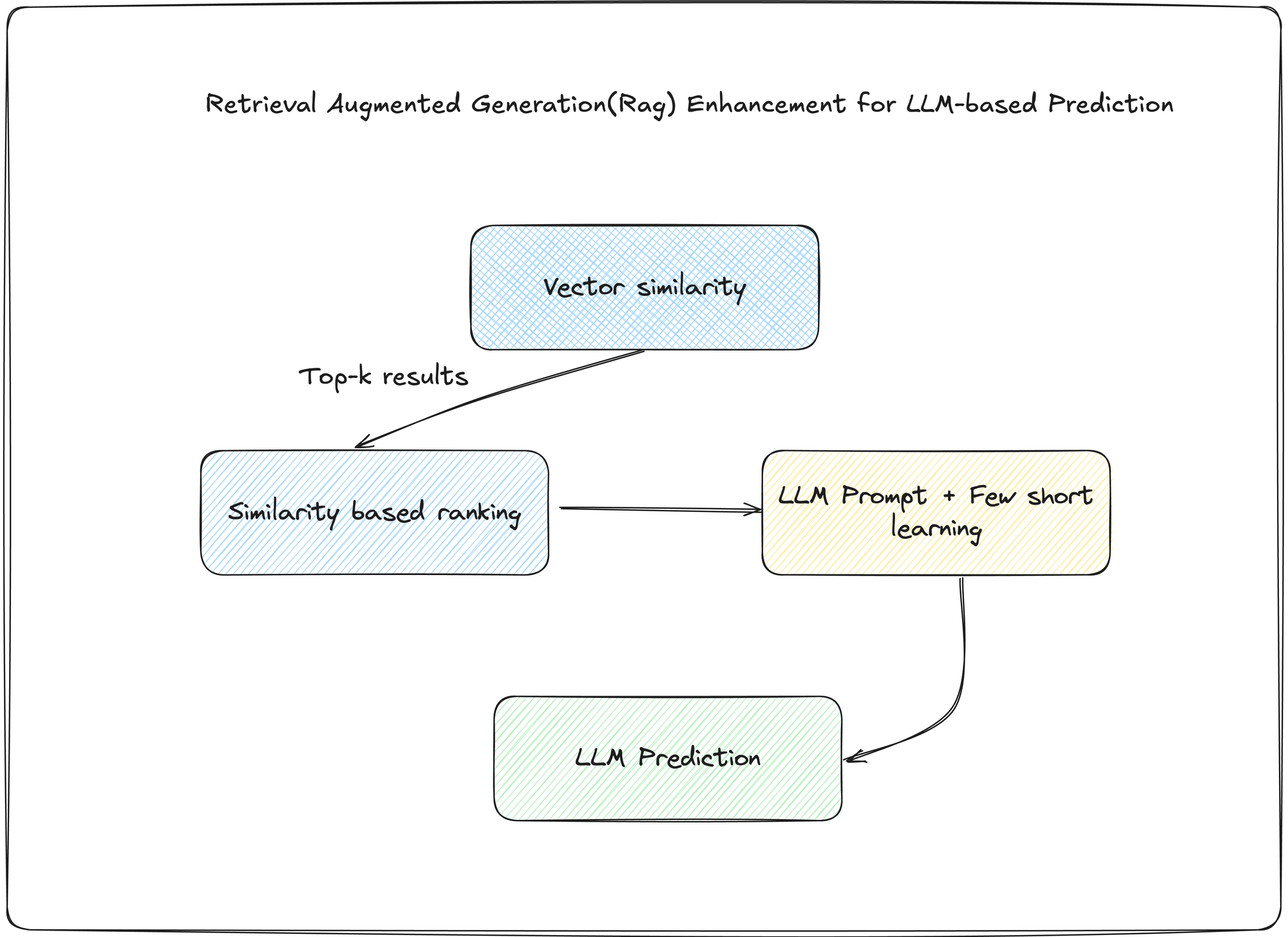

Large Language Models (LLMs) are powerful tools for generating human-like text, but they often hallucinate and lack domain-specific accuracy. Retrieval-Augmented Generation (RAG) addresses this by retrieving external knowledge and supplying it to the model, which improves response accuracy and relevance. In our project, we use an OpenAI model with function calling, where structured data serves as both input and output. To improve response quality, we supply previous inputs and outputs as examples: a vector search finds the five historical results most relevant to the user’s current input.

This post explores how vectorization powers RAG in structured data environments, with code examples, research findings, real-world applications, and alternative retrieval approaches.

Understanding RAG with Structured Data

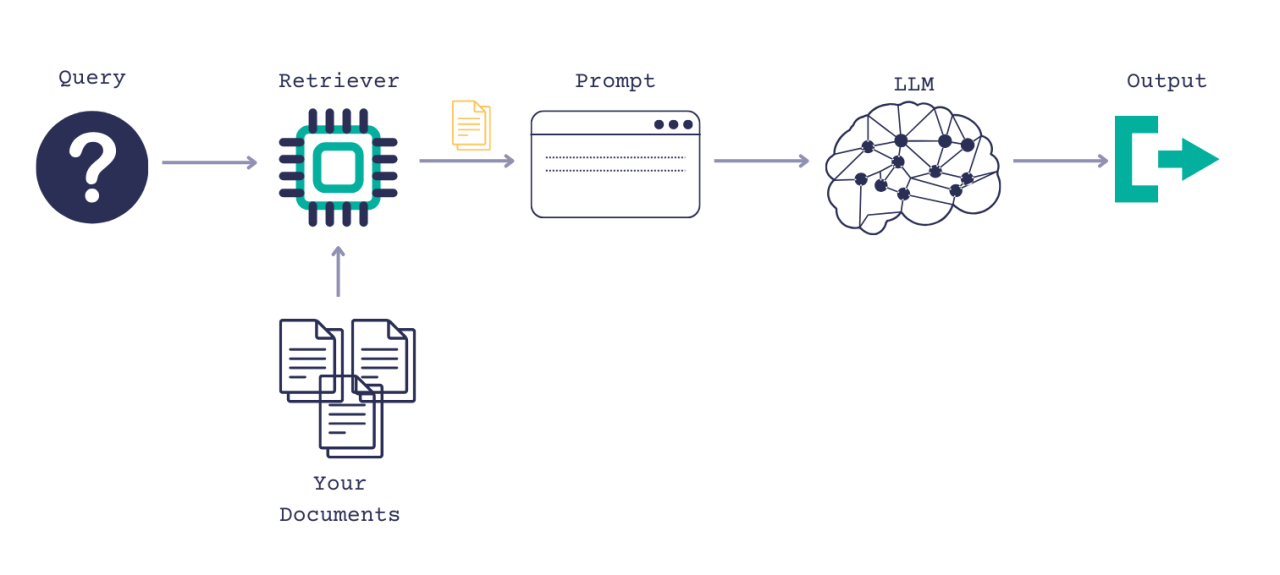

Retrieval-Augmented Generation (RAG) consists of two key components:

- Retriever: Fetches relevant data from a knowledge base or historical dataset.

- Generator: Uses the retrieved data to enhance LLM responses.

In structured data scenarios, information is stored in databases, spreadsheets, or JSON files with defined schemas. Unlike free-text documents, structured data requires specialized retrieval techniques such as vector search for effective RAG integration.

Vectorization in RAG

Vectorization converts structured data into numerical vectors, enabling similarity search with vector search libraries and databases such as FAISS, Weaviate, or Pinecone. By encoding both user queries and structured records as embeddings, we can rank records by their similarity to the query and keep the most relevant ones.
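Before a structured record can be embedded, it usually has to be flattened into a single string. The following is a minimal sketch of one way to do that, assuming a simple "field: value" layout. The helper name `record_to_text`, the separator, and the `category` field are illustrative assumptions, not part of any library or of the project described here.

```python
def record_to_text(record, fields=None):
    """Flatten a structured record into one string suitable for embedding.

    `fields` selects and orders the keys to include; by default every key
    is used in the record's own order. Keys that are missing or None are
    skipped. (Hypothetical helper for illustration.)
    """
    keys = fields if fields is not None else list(record.keys())
    parts = [f"{key}: {record[key]}" for key in keys if record.get(key) is not None]
    return " | ".join(parts)


# Made-up record; "category" is an assumed extra field.
ticket = {"id": 7, "category": "billing", "query": "Where can I find my invoices?"}
text = record_to_text(ticket, fields=["category", "query"])
print(text)
```

Including more than one field in the embedded text lets the search match on context such as a category as well as the query wording. Which fields help is something to test on your own data.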

Example: Converting Structured Data to Vectors

    from sentence_transformers import SentenceTransformer

    # Initialize embedding model
    model = SentenceTransformer('all-MiniLM-L6-v2')

    # Sample structured data (e.g., customer support logs)
    structured_data = [
        {"id": 1, "query": "How do I reset my password?", "solution": "Go to settings and click 'Reset Password'."},
        {"id": 2, "query": "Where can I find my invoices?", "solution": "Invoices are available under 'Billing' section."},
        {"id": 3, "query": "How to cancel my subscription?", "solution": "Navigate to 'Subscriptions' and select 'Cancel'."},
    ]

    # Convert structured queries to vector embeddings, keyed by record id
    vectors = {}
    for record in structured_data:
        query_vector = model.encode(record["query"])
        vectors[record["id"]] = query_vector

    print("Vectorized structured data:", vectors)

Implementing Vector Search

Once structured data is vectorized, we can use similarity search to find the most relevant results for a given query.

Example: Retrieving the Top 5 Matches

    from sklearn.metrics.pairwise import cosine_similarity

    def find_top_matches(user_input, vectors, model, top_n=5):
        # Embed the query with the same model used for the stored records
        input_vector = model.encode(user_input)
        similarities = {}
        for key, vector in vectors.items():
            similarity = cosine_similarity([input_vector], [vector])[0][0]
            similarities[key] = similarity
        # Sort (record id, similarity) pairs, highest similarity first
        sorted_results = sorted(similarities.items(), key=lambda x: x[1], reverse=True)
        return sorted_results[:top_n]

    # User input query
    user_query = "How can I change my password?"
    top_matches = find_top_matches(user_query, vectors, model)
    print("Top matches:", top_matches)

In this example, we compare the user’s query vector with stored vectors using cosine similarity. The top N matches are then selected for LLM augmentation. With only three sample records, the function returns at most three matches.
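A top-N cutoff always returns N results, even when some of them are only weakly related to the query. The sketch below shows what cosine similarity computes and adds a minimum-score filter. It uses only the standard library and toy three-dimensional vectors in place of real embeddings. The function names and the `min_score=0.3` default are assumptions chosen for illustration; a sensible threshold depends on the embedding model and the data.

```python
import heapq
import math


def cosine(a, b):
    """Cosine similarity: dot(a, b) / (|a| * |b|); 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_matches_with_threshold(query_vec, vectors, top_n=5, min_score=0.3):
    """Return up to top_n (id, score) pairs whose score is at least min_score."""
    scored = ((key, cosine(query_vec, vec)) for key, vec in vectors.items())
    kept = [(key, score) for key, score in scored if score >= min_score]
    # nlargest returns the pairs sorted from highest to lowest score
    return heapq.nlargest(top_n, kept, key=lambda pair: pair[1])


# Toy 3-dimensional vectors standing in for real embeddings.
toy_vectors = {1: [1.0, 0.0, 0.0], 2: [0.0, 1.0, 0.0], 3: [0.7, 0.7, 0.0]}
matches = top_matches_with_threshold([1.0, 0.1, 0.0], toy_vectors, top_n=2)
print(matches)
```

Here record 2 is nearly orthogonal to the query and falls below the threshold, so only records 1 and 3 are returned, in that order.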

Integrating Retrieved Data into LLM Generation

Once relevant historical data is retrieved, we can pass it as context to the LLM to improve response generation.
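One way to pass retrieved records to a chat model is as few-shot pairs, where each historical query becomes a user message and its stored solution becomes the assistant's reply. The sketch below only builds the message list and makes no API call. The function name `build_messages` is hypothetical, and the record keys `query` and `solution` are assumed to match the sample data used in this post.

```python
import json


def build_messages(user_input, examples):
    """Build a chat message list with retrieved records as few-shot pairs.

    Each example is a dict with "query" and "solution" keys.
    """
    messages = [{"role": "system", "content": "You are a knowledgeable assistant."}]
    for example in examples:
        messages.append({"role": "user", "content": example["query"]})
        messages.append({"role": "assistant", "content": example["solution"]})
    # The real question goes last so the model answers it, not an example.
    messages.append({"role": "user", "content": user_input})
    return messages


retrieved = [
    {"query": "How do I reset my password?",
     "solution": "Go to settings and click 'Reset Password'."},
]
messages = build_messages("How can I change my password?", retrieved)
print(json.dumps(messages, indent=2))
```

Compared with pasting all solutions into one prompt string, this layout keeps each example's input and output paired, which can help when the expected output has a fixed structure.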

Example: Augmenting LLM Response

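    from openai import OpenAI

    # The client reads the API key from the OPENAI_API_KEY environment variable
    client = OpenAI()

    def generate_response(user_input, top_matches, structured_data):
        # top_matches holds (record id, similarity) pairs, so look records up
        # by id rather than by list position
        records_by_id = {record["id"]: record for record in structured_data}
        context = "\n".join(records_by_id[match_id]["solution"] for match_id, _ in top_matches)
        prompt = (
            f"User query: {user_input}\n\n"
            f"Relevant information:\n{context}\n\n"
            "Provide a helpful response based on the above information."
        )
        # openai>=1.0 interface; openai.ChatCompletion.create was removed in 1.0
        response = client.chat.completions.create(
            model="gpt-4",
            messages=[
                {"role": "system", "content": "You are a knowledgeable assistant."},
                {"role": "user", "content": prompt},
            ],
        )
        return response.choices[0].message.content

    # Generate response based on retrieved examples
    response = generate_response(user_query, top_matches, structured_data)
    print("LLM Response:", response)

Research-Backed Findings on RAG Performance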

Several studies have demonstrated that RAG improves LLM accuracy. Notably:

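- Lewis et al. (2020), who introduced RAG, reported state-of-the-art results on three open-domain question-answering benchmarks and found that RAG models generate more specific, diverse, and factual text than a comparable parametric-only baseline.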

- Research from Meta AI showed that retrieval-augmented approaches significantly reduce hallucination rates in domain-specific tasks.

- A paper by Izacard & Grave (2021) showed that a generative model conditioned on retrieved passages improves open-domain question-answering accuracy, and that accuracy increases as more passages are retrieved.

These findings suggest that integrating retrieval improves LLM reliability. The cited studies evaluate retrieval over unstructured text, so the benefit for structured data applications should be confirmed on your own data.

Real-World Applications of RAG with Structured Data

1. Customer Support Automation

Companies can use RAG-based LLMs to provide accurate responses to customer queries by retrieving past tickets and solutions, reducing response time and improving customer satisfaction.

2. Financial Analysis & Reporting

Financial institutions can leverage structured transaction data to provide insights, detect anomalies, and suggest financial strategies based on past patterns.

3. Healthcare Diagnostics

Medical professionals can retrieve past case studies and structured patient data to provide better diagnoses and treatment recommendations.

4. E-commerce Personalization

Online retailers can use RAG to recommend products by retrieving similar purchase histories and user preferences from structured transaction logs.

5. Legal & Compliance Advisory

Law firms can retrieve prior case law and compliance reports to provide legal insights tailored to user queries.

Alternative Approaches to Improve RAG with Structured Data

1. Hybrid Retrieval (Vector + Keyword Search)

Combining vector search with traditional keyword-based search can improve accuracy. Keyword search ensures exact matches, while vector search retrieves semantically similar data.
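A common way to combine the two result lists is reciprocal rank fusion (RRF), which needs only each list's ordering and not comparable scores. The sketch below is a plain-Python illustration with made-up rankings. RRF is named here as one possible fusion method, not as the approach used in the project, and `k=60` is a commonly used default rather than a tuned value.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of ids into one list using RRF.

    Each id scores sum(1 / (k + rank)) over the lists it appears in,
    with rank starting at 1. Ids are returned from highest to lowest score.
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


vector_ranking = [1, 3, 2]   # ids ordered by embedding similarity (made up)
keyword_ranking = [3, 4, 1]  # ids ordered by keyword match score (made up)
fused = reciprocal_rank_fusion([vector_ranking, keyword_ranking])
print(fused)  # [3, 1, 4, 2]
```

Records that rank well in both lists (3 and 1 here) rise to the top, while records found by only one method are kept but ranked lower.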

2. Graph-Based Retrieval

Graph databases (e.g., Neo4j) allow structured data relationships to be leveraged. Queries can retrieve interconnected records, enhancing retrieval relevance.
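The idea of retrieving interconnected records can be sketched without a graph database: start from the ids returned by vector search and follow links to related records. The example below uses a plain adjacency dictionary and a breadth-first traversal. The function name, the `max_hops` parameter, and the ticket links are hypothetical, and a real deployment would issue the equivalent query against the graph store instead.

```python
from collections import deque


def expand_with_neighbors(seed_ids, edges, max_hops=1):
    """Return the seed ids plus every id reachable within max_hops edges.

    `edges` maps an id to the ids it links to (a plain adjacency dict).
    Results keep breadth-first discovery order.
    """
    seen = list(dict.fromkeys(seed_ids))  # de-duplicate, keep order
    visited = set(seen)
    queue = deque((seed, 0) for seed in seen)
    while queue:
        node, hops = queue.popleft()
        if hops == max_hops:
            continue  # do not expand beyond the hop limit
        for neighbor in edges.get(node, []):
            if neighbor not in visited:
                visited.add(neighbor)
                seen.append(neighbor)
                queue.append((neighbor, hops + 1))
    return seen


# Hypothetical links: ticket 1 relates to ticket 4, which relates to ticket 5.
related = {1: [4], 4: [5], 2: [1]}
one_hop = expand_with_neighbors([1], related)
two_hops = expand_with_neighbors([1], related, max_hops=2)
print(one_hop, two_hops)
```

Keeping `max_hops` small limits how much loosely related material ends up in the prompt.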

3. Fine-Tuning LLMs

Instead of relying solely on retrieval, fine-tuning an LLM on domain-specific structured data can improve its inherent knowledge base.
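Fine-tuning starts with converting historical records into training examples. The sketch below writes one JSON object per line, each holding a `messages` list. It assumes the chat-style JSONL layout described in OpenAI's fine-tuning documentation, so check the current documentation for exact requirements before uploading anything. The function name and the system prompt are illustrative.

```python
import json


def to_finetune_lines(records, system_prompt="You are a knowledgeable assistant."):
    """Convert query/solution records into JSONL lines of chat messages."""
    lines = []
    for record in records:
        example = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": record["query"]},
                {"role": "assistant", "content": record["solution"]},
            ]
        }
        lines.append(json.dumps(example, ensure_ascii=False))
    return lines


records = [
    {"id": 1, "query": "How do I reset my password?",
     "solution": "Go to settings and click 'Reset Password'."},
    {"id": 2, "query": "Where can I find my invoices?",
     "solution": "Invoices are available under 'Billing' section."},
]
jsonl = "\n".join(to_finetune_lines(records))
print(jsonl)
```

The resulting string can be written to a `.jsonl` file, with each line forming one training example.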

Conclusion

Vector search plays a central role in implementing RAG for structured data, enabling personalized and context-aware LLM responses. By combining embeddings, similarity search, and alternative retrieval approaches, we can improve the accuracy of AI-driven applications. Whether for customer support, finance, or other structured data use cases, RAG-powered LLMs can deliver more accurate and relevant interactions.

For developers looking to implement RAG in structured data environments, exploring hybrid retrieval techniques and LLM fine-tuning can further optimize performance.

Would you like a follow-up guide on fine-tuning LLMs for structured data? Let us know in the comments!
